There has been a lot of discussion around generative AI, especially surrounding how it is trained. While creators and artists have often raised concerns about infringement when large amounts of work created by humans are taken into the dataset to train a model, proponents of generative AI have often stated that using art to train an AI model is fair use. But is it?

Luckily the United States Copyright Office has weighed in with another report, this time focusing on generative AI training. Here we will take a look at their conclusions and recommendations on fair use doctrines, and whether training an AI model constitutes fair use.

How is AI Trained?

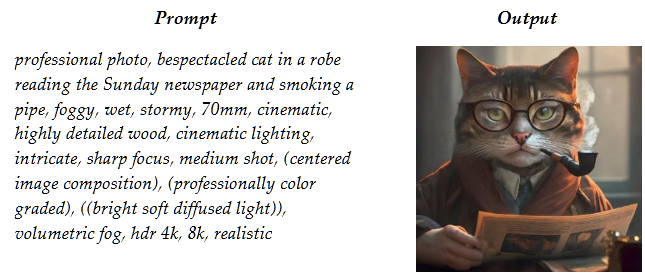

The first topic to understand is how AI works and how it is trained. To avoid the technical nitty-gritty, the important part for our discussion is that generative AI models are trained on existing works created by humans. These works could be text, art, video, or even music.

By learning from a dataset, which is called training data, these models build a statistical model of inputs and expected outputs. This is different from traditional programs, which follow specifically programmed rules. From this, these models can do things like predict text, as ChatGPT does, or create images.
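To make the contrast with hand-written rules concrete, here is a deliberately tiny sketch of a statistical text predictor. It is not how ChatGPT or any production model works; it only illustrates the idea that the "rules" come from counting patterns in training data. The function names and the three example sentences are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(texts):
    """Count, for each word, which words follow it in the training texts."""
    counts = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(model, word):
    """Return the most frequently observed next word, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training data: the model knows nothing beyond these sentences.
training_texts = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "a dog sat on the rug",
]
model = train_bigram_model(training_texts)
print(predict_next(model, "the"))    # "cat" follows "the" most often
print(predict_next(model, "sat"))    # "on" follows "sat" both times
print(predict_next(model, "piano"))  # never seen in training, so None
```

Nothing in this code says that "cat" should follow "the"; that behavior comes entirely from the works it was trained on, which is why the source and scale of training data matter so much.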

The first problem with training data is the sheer scale of it. To function as general-purpose models rather than task-specific ones, generative AI models need millions or even billions of works. More data leads to better performance. While there are experiments that would allow generative AI models to function with less data, they would still be on the scale of millions.

The second challenge with training data is that the input data must be high quality in order for these models to function. For one example, older texts that are often in the public domain tend to lead to worse performance on modern language tasks. Other sources that might be available can reflect certain biases or contain other content that would make the model unusable. In the end, a model is only as good as the training data. Garbage in, garbage out.

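As far as copyright goes, building a training dataset involves copying works, which implicates the right of reproduction among other rights that are exclusively held by the copyright owner. That means that, absent a license or a defense such as fair use, copying works protected by copyright into a dataset is infringement.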

This is where the debate over fair use comes from. A common practice to train AI models is to just download data (which is “publicly available”) from the internet, but just because it is on the internet does not mean that it is licensed for whatever you want to use it for.

Proponents of generative AI warn that requiring AI companies to license copyrighted works would stop what could be a transformative technology, and that it is not possible to obtain all of the licenses for the volume and diversity of content that would be needed to create these systems.

On the other hand, unlicensed training would be bad for creators, since their work could be incorporated into a generative AI model without their permission, which would infringe on several of their rights in the work they created that are otherwise protected by copyright.

In the absence of laws on the topic, fair use comes into question. If training an AI model on copyrighted works is fair use, then it is a defense against what would otherwise be infringement.

What is Fair Use?

First of all, we need to discuss whether or not using copyrighted works to train an AI model qualifies as fair use. Fair use is an affirmative defense that is often misunderstood on the internet. It is a defense against a claim of infringement that permits the use of copyrighted material without the permission of the author under certain circumstances.

In order to determine if a use of a copyrighted work qualifies as fair use, courts have to look at four factors with the goal of balancing creators’ exclusive rights to their works and enabling other users to build on those works. These four factors are:

- The purpose and character of the use

- The nature of the copyrighted work

- The amount and substantiality of the work used

- The effect on the market for the protected work

Note that these factors are a balancing test, so determining whether a use is protected by fair use is a case by case analysis done by the courts. As such, it will depend on the specific facts of a case the court is looking at.

Since any given generative AI model uses so much copyrighted work in its training, a lot of what courts would look at involves the model itself, what it does, and how it was trained, rather than any individual works that are part of the data set.

We’ll be looking at each of these factors in turn and what the US Copyright Office has said about them in order to try to understand whether a court would conclude that the use of copyrighted works in AI training could be fair use.

Purpose and Character of the Use

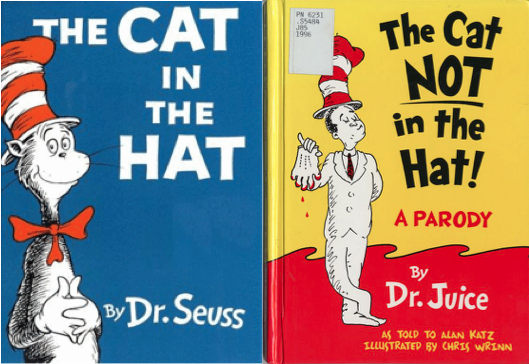

This factor looks at whether the infringing use has a further purpose or different character from the original work. The two main elements are the transformativeness and commerciality of the use.

A use that has a different purpose is more justified because it furthers the goal of copyright, while infringing on a work to produce another work of the same type is less justified. Remixing an existing work to create something new is better than taking something to make something that’s the same as the original work and supersedes it.

The Copyright Office’s report determined that training a generative AI foundation model on a large dataset with lots of different types of data will often be transformative. The purpose is different from the original works, since an AI model is meant to perform a variety of functions while a single work is created to disseminate that specific work.

The question of whether a use is transformative depends on how the model is used and how it is deployed. A use is most transformative when the model is deployed for research and can be constrained to uses that aren’t substitutes for the original work. On the other hand, training a model to generate outputs that are “substantially similar to the copyrighted works” is not as transformative, as is often seen with AI that can create images or other artistic outputs.

There is one other case that is significantly different, and that is RAG or Retrieval-Augmented Generation. This is where instead of giving an output based on a wide dataset, the model retrieves individual works that would be relevant to the user’s prompt in order to give an answer. Most people have seen this when they ask an AI model such as ChatGPT a question.

This is less likely to be transformative since the purpose is to generate outputs that summarize retrieved copyrighted works like news articles. In these cases, fair use is less likely to apply. As an aside, it is also potentially harmful to the providers of that information, since you’re reading the AI-generated summary instead of the actual source.

Sidenote: Isn’t AI training just like human learning?

The Copyright Office rejected a common argument about the transformative nature of AI training: that AI training is transformative because it is just like human learning. The Copyright Office believes that this argument fails because its premise is flawed.

First of all, fair use does not excuse all human acts done for the purpose of learning, and so computers should not have greater ability to do so. Otherwise all infringement done for the purposes of learning, such as pirating textbooks, would be fair use. College textbooks would be essentially free, and there would be no reason to produce them. Why would AI get special protections here?

Second, AI learning is different from human learning in ways that are important to copyright analysis, since humans only remember “an imperfect impression of a work” compared to generative AI which involves the creation of perfect copies in its dataset.

The Nature of the Copyrighted Work

When looking at the nature of the copyrighted work, copyright law recognizes that some works are closer to the intended purpose of copyright protection than others. More creative or expressive works are less likely to be found to be fair use than factual and functional ones. AI datasets usually include expressive works, though they often also contain a lot of data that isn’t as protected due to its factual nature.

Once again, generative models take in a lot of data. As such, looking at this factor will depend on the model and what works are at issue. If an individual work is more expressive, its use is less likely to be fair use.

The Amount and Substantiality of the Work Used

This factor looks at how much of the work is used in light of the purpose of the use. Copying an entire work is less justifiable than taking only a portion. There are situations where it can still be fair use to copy entire works, such as when Google created a searchable database for books¹ or when works are copied to create plagiarism detection software.

Usually AI datasets involve taking the entire work (or almost all of it) and including it in a dataset. More data is usually better in those cases, rather than a selection. The Copyright Office agreed with some commenters that the use of entire works that were protected by copyright is less justifiable than other uses like Google Books. The use of an entire work could be reasonable if there was a transformative purpose.

Another factor that a court would look at would be the amount of the work that is made available to the public. This is another area where AI training is contrasted against a searchable database like Google Books, which only allows users to preview a small selection of the complete work. If there are effective limits on the AI model’s ability to output substantial selections from works in the training data, this could weigh in favor of fair use. Otherwise, if a user asks for a selection from a book or an image and the model can produce a substantial selection from that work, then it is less likely to be fair use.
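As a rough illustration of what an output limit could look like, the sketch below measures the longest run of consecutive words that a model's output shares with a protected text and flags the output when that run is too long. This is only one possible approach: the function names are hypothetical, the 8-word threshold is arbitrary and is not a legal standard, and nothing here is drawn from the Copyright Office's report or any real AI system.

```python
def longest_shared_run(output, source):
    """Length, in words, of the longest word sequence found in both texts."""
    a = output.lower().split()
    b = source.lower().split()
    best = 0
    previous = [0] * (len(b) + 1)
    for i in range(1, len(a) + 1):
        current = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                # Extend the run that ended at the previous word pair.
                current[j] = previous[j - 1] + 1
                best = max(best, current[j])
        previous = current
    return best

def exceeds_limit(output, source, max_words=8):
    """Flag outputs that repeat more than max_words consecutive source words."""
    return longest_shared_run(output, source) > max_words

# Hypothetical example texts.
source = "it was the best of times it was the worst of times it was the age of wisdom"
verbatim = "the book opens with it was the best of times it was the worst of times and continues"
paraphrase = "the novel contrasts the best of times with the worst"

print(exceeds_limit(verbatim, source))    # long verbatim passage is flagged
print(exceeds_limit(paraphrase, source))  # short overlap is allowed
```

A check like this only catches word-for-word copying. Whether a given output is a "substantial selection" in the legal sense is a question for a court, not for a word counter.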

The Effect on the Market for the Protected Work

This factor looks at the effect of the use on the market or potential market for the copyrighted work. Courts look not only at harm done to the original work, but also at harm to the market for derivative works, such as sequels, spin-offs, or just other similar works that would appeal to fans of the first one. This is the most significant element of fair use analysis.

One aspect that courts would look at would be lost sales, whether actual lost sales or the loss of sales due to a potential market substitution of the infringement. There are many different perspectives on this question concerning the output of generative AI.

As with the previous factor, if the model allows users to output substantial portions of the works that it was trained on, then this can directly result in a loss of sales due to substitution. An AI model that does not have proper safeguards will tend to be less likely to be fair use.

Retrieval-augmented generation, or RAG, is a specific kind of generative AI use that can lead directly to lost sales. In RAG, an AI model augments its responses by retrieving relevant content during the generation process. You most often see this when you ask a generative AI model a question and it retrieves sources from the internet (such as news or other online content) and summarizes them to generate an answer. This type of generation can result directly in lost clicks to the websites producing the retrieved content, which means a finding of fair use is much less likely in these cases.
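The retrieval step can be sketched in a few lines. The example below is a toy: it ranks documents by simple word overlap and then assembles the text that would be handed to a language model, without calling any real model. The function names, the two sample "articles," and the prompt wording are all assumptions made for illustration; real systems typically use far more sophisticated search.

```python
def score(query, document_text):
    """Count how many distinct query words appear in the document."""
    query_words = set(query.lower().split())
    doc_words = set(document_text.lower().split())
    return len(query_words & doc_words)

def retrieve(query, documents, top_k=1):
    """Return the top_k documents that share the most words with the query."""
    ranked = sorted(documents, key=lambda d: score(query, d["text"]), reverse=True)
    return ranked[:top_k]

def build_prompt(query, retrieved):
    """Combine the retrieved sources and the question into one prompt."""
    sources = "\n".join(f"[{d['title']}] {d['text']}" for d in retrieved)
    return f"Answer using these sources:\n{sources}\n\nQuestion: {query}"

# Hypothetical stand-ins for online articles.
documents = [
    {"title": "Local News", "text": "The city council approved a new park budget on Tuesday"},
    {"title": "Sports Desk", "text": "The home team won the championship game last night"},
]
query = "who won the championship game"
retrieved = retrieve(query, documents)
prompt = build_prompt(query, retrieved)
print(prompt)
```

Notice that the retrieved article's text is copied into the prompt itself. The answer the user reads is built from that specific source, which is why a summary can substitute for a visit to the publisher's site.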

Another case where this factor will be clear-cut is where the protected work has the intended use of AI training, so the market is licensing deals to AI companies. When the dataset has evidence of human selection and arrangement with the intent of licensing to AI training, then this factor will weigh heavily against fair use. Similarly, where the work in the dataset could otherwise be licensed for AI training, there is a negative effect on the market for that work.

Of course, there is also the question of how the actual dataset is created. There have already been cases where the creators of AI models have had to pay out large sums of money because they illegally downloaded the works in the dataset used to train their model, such as Anthropic, which will have to pay out $1.5 billion to settle a lawsuit by a group of authors. Those cases tend to turn on infringement through piracy in acquiring the works rather than on the use of the works as training data.

The Copyright Office’s report also points out that even if a generative AI’s output isn’t substantially similar to any given copyrighted work, it can still cause competition in the market for that type of work, and that this factor should be read broadly. A good example of this is a game developer using AI to generate assets that would otherwise involve hiring (and paying) a human artist.

Overall, the copying that is involved in training a generative AI system causes significant market harm for the works that are being copied in the dataset in a variety of ways. This harm is what a court will look at when weighing these factors.

Conclusion: Is Training AI Fair Use?

This all raises the final question: is it fair use to train AI? Can AI companies use copyrighted works when training without paying for them? Right now, we can’t really answer this question. This is something that the court system will have to work through and the laws will have to catch up with as it is still a developing field.

Since AI involves many different uses, we can’t really say if all of them would be fair use without the specific facts of a given case. When determining if something is fair use, these are the factors that a court would look at, and there is no mechanical formula.

Some uses may qualify as fair use, as in the recent Bartz v. Anthropic case, while others will not, as in the also recent case of Thomson Reuters v. Ross Intelligence. Uses for noncommercial research and analysis that also make sure that large portions of the work cannot be reproduced by the output are more likely to be fair use, while those that are created for commercial purposes or to create a commercial product are less likely to be.

Of course, there are other legal issues surrounding generative AI, especially for game developers. Keep in mind that using generative AI may limit your ability to hold copyright over the generated assets, and that you are required to disclose the use of generative AI to customers on Steam.

The law will continue to develop as new technologies are created and specific cases are brought through the court system. It will be interesting to see how fair use doctrines are applied to generative AI.

1. See Authors Guild v. Google, 804 F.3d 202 (2d Cir. 2015)